So, I must admit, I was in a hurry and messed up when removing a datastore from my vSphere environment. Now I have an inaccessible, orphaned datastore in vCenter: the datastore is gone on the storage side, and I cannot delete it from vCenter either.

When attempting to delete it from vCenter, I receive the following error: “An error occurred during host configuration: Operation failed, diagnostics report: Not a known device: naa.[ID]”

What did I do to cause this issue? Well, I reconfigured storage on the NAS and removed/reconfigured the LUN/Extent before removing it and any VMFS datastore(s) from vCenter. This, of course, left a datastore record in vSphere with no LUN on the storage side to map to, resulting in an orphaned VMFS datastore.

The proper way would have been to remove the VMFS datastore from vCenter first, then remove the LUN from the NAS, as this VMware documentation page describes: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.storage.doc/GUID-ED44059C-CB8C-4B4E-872A-15DABC96D395.html

Or if detaching a LUN, this KB: https://kb.vmware.com/s/article/2004605
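For reference, here is a minimal sketch of that order of operations from the ESXi command line, as I understand it from the KB above. The datastore label and naa ID are placeholders, and the run helper and retire_datastore function are names I made up for illustration. It only prints the commands unless DRY_RUN=0 is set, so treat it as a checklist rather than a tested procedure.

```shell
# Dry-run by default: print each command instead of running it.
# Set DRY_RUN=0 on an ESXi host to execute the commands for real.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# retire_datastore LABEL NAA_ID
# LABEL and NAA_ID are placeholders; substitute your own values.
retire_datastore() {
  label="$1"
  naa_id="$2"
  # 1. Unmount the VMFS datastore by its label.
  run esxcli storage filesystem unmount -l "$label"
  # 2. Detach the backing device from this host.
  run esxcli storage core device set --state=off -d "$naa_id"
  # 3. Manual step on the storage side, outside of ESXi.
  echo "# now unpresent the LUN on the storage array, then:"
  # 4. Rescan so the host notices the LUN is gone.
  run esxcli storage core adapter rescan --all
  # 5. Remove the device from the host's detached-device list.
  run esxcli storage core device detached remove -d "$naa_id"
}
```

Calling retire_datastore TrueNAS-500GB-03 naa.EXAMPLE prints the four esxcli commands in order, which makes it easy to review the sequence before running anything against a real host.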

To start troubleshooting and removing the orphaned record, I first ran a Rescan Storage task on the complaining host, ESX03 (the target host in the task event). This rescans storage devices and VMFS volumes, but it did not resolve the issue. A Rescan Adapters (HBA) task ended with the same result, as expected.
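The same two rescans can also be done from the ESXi shell instead of the vSphere GUI. A small sketch: rescan_commands is a helper name I made up, it only prints the commands, and vmhba64 in the usage note is a placeholder adapter name, not one from my hosts.

```shell
# Print the rescan commands for one adapter, or for all adapters
# when no adapter name is given. Nothing is executed here.
rescan_commands() {
  adapter="${1:-}"
  if [ -n "$adapter" ]; then
    # Rescan a single storage adapter (HBA).
    echo "esxcli storage core adapter rescan --adapter=$adapter"
  else
    # Rescan every storage adapter on the host.
    echo "esxcli storage core adapter rescan --all"
  fi
  # Rescan for VMFS volumes.
  echo "vmkfstools -V"
}
```

On an ESXi host, piping the output to sh (for example, rescan_commands vmhba64 | sh) would run the commands; calling it with no argument targets all adapters.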

Looking further into the issue, after running those tasks, my hosts do not show the inaccessible device in the storage adapter view:

I also restarted all vCenter services, but the record is still hanging around! So, you know what that means? This is a vCenter issue and I will need to dig in, and from past issues I have dealt with I know this is going to involve PSQL queries.

Ok…here we go 🙂

Turn on SSH in the vCenter VAMI. Under the Access menu on the left, click Edit (on the right), move the Activate SSH Login slider to the right, then click OK.

Now fire up PuTTY or your SSH client of choice and log in as root.

Launch the Bash shell by typing shell at the prompt. Shell access will be granted, and you will see the Bash prompt.

Here is where I need to put a disclaimer and a warning: any time a user edits the vCenter Postgres database, sad things could happen. This is how I solved my issue, not a recommendation or suggestion, and do not blame me if you mess up. Just in case I do mess something up, I made sure to take a snapshot and verified that my file-level backup (in VAMI) had completed successfully at the last scheduled backup.

From here I am going to dig into psql, the PostgreSQL command-line client, which will help me find the values in the vCenter database:

/opt/vmware/vpostgres/current/bin/psql -d VCDB -U postgres

Typing in \? will get you all the PSQL commands available. What I need to do is look for the records where the datastore entry is held. It is a good thing I am familiar with T/P-SQL commands and queries, but I need to know what table to query.

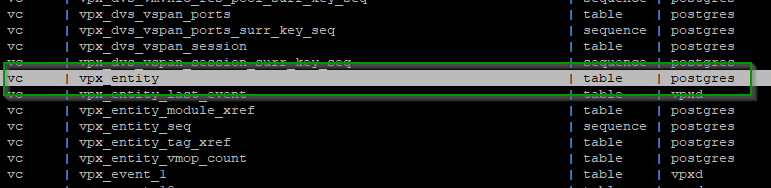

Using the \d command will list all the available tables. FYI, there are 1200 tables, so the list is long, but I know from doing this previously that the data is held in the vpx_entity table. Huge thanks and recognition to VMNINJA for this article, which has helped me resolve similar issues in the past: Remove Inaccessible datastore from inventory | vmninja (wordpress.com)

Now I can run a SELECT statement against that table to look up my inaccessible datastore by name:

SELECT id FROM vpx_entity WHERE name = 'TrueNAS-500GB-03';

And there it is: it lives in the vCenter Postgres database with an id of 15. Is there anything else connected to this datastore? To find out, we can run this query:

SELECT * FROM vpx_ds_assignment WHERE ds_id=15;

From the ‘entity_id’ column, it appears three other entities (21, 8, and 46) are using this datastore. These ‘entity_id’ values refer to three of my ESXi hosts, which are in a connected state but do not have the datastore mounted. That matches what I see in the vSphere GUI: the hosts are connected, but the VMFS datastore is orphaned…
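To see the names behind those entity_id values without looking each one up, a join against vpx_entity should do it. This is only a sketch: it assumes entity_id maps to vpx_entity.id (which is how it looked in my case), and ds_entities_sql is a hypothetical helper that does nothing more than build a read-only SELECT.

```shell
# Build a read-only query that lists the entities still assigned
# to a datastore. Table and column names (vpx_entity.id, name,
# vpx_ds_assignment.ds_id, entity_id) are the ones used in this post.
ds_entities_sql() {
  ds_id="$1"
  # Accept digits only, so nothing unexpected ends up in the SQL text.
  case "$ds_id" in
    ''|*[!0-9]*) echo "ds_id must be numeric" >&2; return 1 ;;
  esac
  printf 'SELECT e.id, e.name FROM vpx_entity e JOIN vpx_ds_assignment a ON a.entity_id = e.id WHERE a.ds_id = %s;\n' "$ds_id"
}

# Example, on the vCenter appliance only:
#   /opt/vmware/vpostgres/current/bin/psql -d VCDB -U postgres \
#     -c "$(ds_entities_sql 15)"
```

Keeping the helper to a SELECT means it cannot change anything in VCDB, which is exactly what I want while I am still only investigating.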

Hmm, so there is a plot twist here…I was able to solve the issue, or rather, the issue resolved itself.

Before running PSQL queries to manually delete the ds_id, I went back to each host in the list above, selected the iSCSI adapter, and rescanned the storage one more time before attempting to remove the datastore via SSH/CLI.

And do you know what?? The inaccessible datastore removed itself from the list!

Going back into psql on VCDB, I also verified that no records with a ds_id of 15 remain.

So, in summary: for an inaccessible datastore to drop out of the inventory, the datastore (ds_id) must not have any associated entities (entity_id) in the vCenter DB. Querying VCDB with psql helped me find out which hosts were still associated with the datastore, something that was not visible in vCenter. In the end, after I ran a storage rescan on each host, vSphere removed the orphaned datastore from its list of VMFS datastores.

I much prefer a rescan over manually deleting fields and values in the vCenter DB!

Thanks for reading – please comment down below if any of this helped or if you have ever run across this issue.

Cheers!!
